I will argue that it is a category mistake to regard mathematics as “physically real”. I begin by quoting D. E. Littlewood from his remarkable book “The Skeleton Key of Mathematics”:

“A trained sheepdog may perceive the significance of two, three or five sheep, and may know that two sheep and three sheep make five sheep. But very likely the knowledge would tell him nothing concerning, say horses. A child learns that two fingers and three fingers make five fingers, that two beads and three beads make five beads. The irrelevance of the fingers or the beads or the exact nature of the things that are counted becomes evident, and by the process of abstraction the universal truth that 2+3=5 becomes evident.”

I can just hear someone pouncing on Littlewood’s phrase “universal truth”, but that is not the reason I quote this paragraph. I quote it as a reminder that the essence of mathematics is never in the particular way it is represented, but in the concept that it brings forth and the unification of particulars that it embodies.

One might hypothesize that any mathematical system will find natural realizations. This is not the same as saying that the mathematics itself is realized. The point of an abstraction is that it is not, as an abstraction, realized. The set { { }, { { } } } has 2 elements, but it is not the number 2. The number 2 is nowhere “in the world”.
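A minimal sketch in Python can make this concrete, assuming we model sets as `frozenset` values. The function names `von_neumann` and `zermelo` are illustrative labels for two standard set-theoretic encodings of the natural numbers; they are assumptions of this sketch, not anything taken from Littlewood. Each encoding offers a set that can stand for 2, yet the two sets are different objects, and only one of them has 2 elements.

```python
# Two set-theoretic encodings of the natural numbers, modeled with frozensets.
# The function names are hypothetical labels chosen for this sketch.

EMPTY = frozenset()  # the empty set { }, used as "0" in both encodings


def von_neumann(n):
    """Encode n as the set of all smaller encoded numbers."""
    s = EMPTY
    for _ in range(n):
        s = s | frozenset([s])  # successor: s union {s}
    return s


def zermelo(n):
    """Encode n by nesting: the successor of s is {s}."""
    s = EMPTY
    for _ in range(n):
        s = frozenset([s])
    return s


two_vn = von_neumann(2)  # { { }, { { } } }
two_z = zermelo(2)       # { { { } } }

print(len(two_vn))       # 2
print(len(two_z))        # 1
print(two_vn == two_z)   # False
```

Neither set has a better claim to being the number 2. Each is an instance that carries the abstraction within a particular convention, which is the distinction drawn above.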

I argue that one should understand from the outset that mathematics is distinct from the physical. Then it is possible to get on with the remarkable task of finding how mathematics fits with the physical. That task ranges from the fact that we can represent numbers by rows of marks

|, ||, |||, ||||, |||||, ||||||, …

(and note that any concrete scheme like this works only for a while: the marks soon become hard to organize and count, while the abstraction lives on as clear as ever) to the intricate relationships of the representations of the symmetric groups with particle physics (bringing us back ’round to Littlewood and the Littlewood-Richardson rule, which appears to be the right abstraction behind elementary particle interactions).

Search for the right conceptual match between mathematics and phenomena, physical and computational. Understand that mathematics is distinct from its instances. And yet our understanding of the abstractions is utterly dependent on knowing more and more about their instantiations. The domains of the physical and the conceptual are distinct, yet they are mutually supporting.

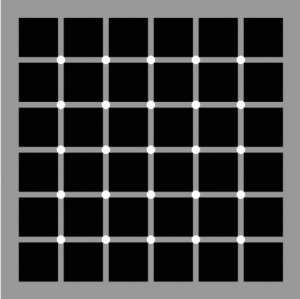

It is very hard to take this point of view because we do not usually look carefully enough at our mathematics to distinguish the abstract part from the concrete or formal part. The key is in the seeing of the pattern, not in the mechanical work of the computation. The work of the computation occurs in physicality. The seeing of the pattern, and the understanding of its generality, occur in the conceptual domain.

Conceptual and physical domains are interlocked in our understanding.